The Rise of Diffusion Models in Generative AI

Generative adversarial networks once dominated the conversation about synthetic images, but a new family of models has taken center stage. Diffusion models, originally inspired by non-equilibrium statistical physics, now power some of the most advanced text-to-image systems. Their main strengths are stable training, favorable scaling, and high image quality.

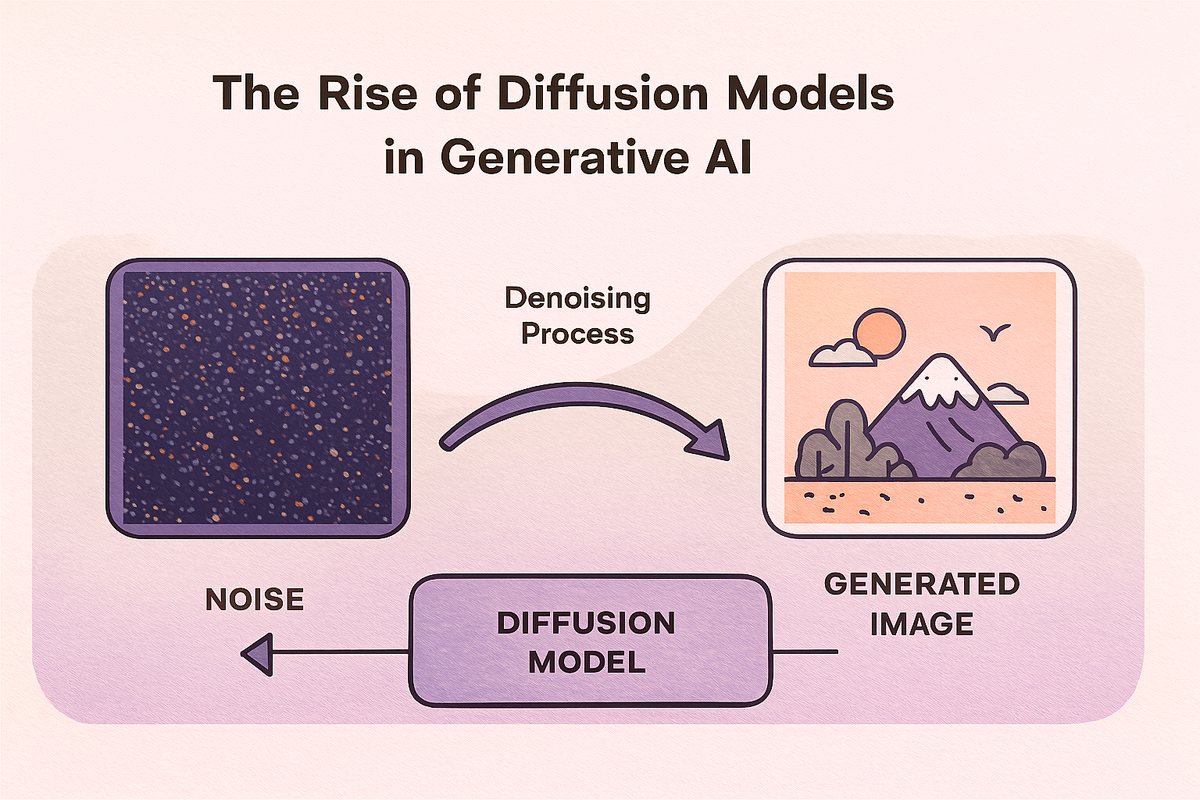

The core idea is conceptually simple. A diffusion model learns to reverse a process that gradually adds Gaussian noise to an image. During training, clean images are corrupted to a randomly chosen noise level (the forward process has a closed form, so any step can be reached directly rather than one step at a time), and the model learns to predict the noise that was added. At inference, the model starts from pure noise and applies its learned denoising step repeatedly, moving from high noise to low noise until a coherent image emerges.
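To make the two phases concrete, here is a minimal sketch in standard-library Python that follows the formulation of Ho et al. (arXiv:2006.11239, listed in the references): a linear noise schedule, the closed-form forward step, the noise-prediction training loss, and the step-by-step sampling loop. It is an illustration under simplifying assumptions, not a production implementation: an "image" is just a flat list of floats, and `predict_noise` is a hypothetical stand-in for a trained neural network. All function names are illustrative.

```python
import math
import random

# Linear noise schedule from the DDPM paper: beta rises from 1e-4 to 0.02 over T = 1000 steps.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bars[t] = product of (1 - beta_s) for s <= t: the share of the
# original signal variance that survives after t + 1 noising steps.
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def q_sample(x0, t, noise):
    """Forward process in closed form: jump straight to step t via
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]


def training_loss(x0, predict_noise, rng):
    """One training example: pick a random step, corrupt x0 to that noise
    level, and score the network's noise prediction with mean squared error."""
    t = rng.randrange(T)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = q_sample(x0, t, noise)
    predicted = predict_noise(x_t, t)
    return sum((p - n) ** 2 for p, n in zip(predicted, noise)) / len(x0)


def sample(size, predict_noise, rng):
    """Reverse process: start from pure Gaussian noise and apply the learned
    denoising step from t = T - 1 down to t = 0."""
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(T)):
        beta, a_bar = betas[t], alpha_bars[t]
        eps = predict_noise(x, t)
        # Estimated mean of x_{t-1}: remove the scaled predicted noise, then rescale.
        mean = [(xi - beta / math.sqrt(1.0 - a_bar) * ei) / math.sqrt(1.0 - beta)
                for xi, ei in zip(x, eps)]
        # Add fresh noise with variance beta_t on every step except the last.
        x = [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean] if t > 0 else mean
    return x


# Hypothetical stand-in for a trained noise-prediction network.
def dummy_predict_noise(x_t, t):
    return [0.0] * len(x_t)


rng = random.Random(0)
loss = training_loss([0.5, -0.5, 1.0], dummy_predict_noise, rng)
image = sample(3, dummy_predict_noise, rng)
```

Note how training never runs the chain step by step: `q_sample` reaches any noise level in one shot, which is what makes training cheap. Sampling, by contrast, must call the network once per step, which is the source of the inference cost discussed below.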

This framework has several advantages over earlier generative models. Training is more stable than in adversarial setups because there is no min-max game between a generator and a discriminator; the model is trained with a simple regression loss on the added noise. And because generation unfolds over many denoising steps, each step can be conditioned on extra information such as text prompts, semantic maps, or other signals, giving fine-grained control over the output.
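The text does not name a specific conditioning mechanism. One widely used option for text prompts is classifier-free guidance (Ho and Salimans), in which the same network is trained with the condition occasionally dropped, and at sampling time its conditional and unconditional noise estimates are combined. The sketch below shows only that combination step. It assumes a hypothetical `predict_noise(x_t, t, cond)` that accepts `None` to mean "no condition"; the toy network is invented purely for illustration.

```python
def guided_noise(predict_noise, x_t, t, cond, guidance_scale):
    """Classifier-free guidance: start from the unconditional noise estimate and
    push it toward (or past) the conditional one. guidance_scale = 1 gives the
    plain conditional prediction; larger values follow the condition more strongly."""
    eps_uncond = predict_noise(x_t, t, None)  # condition dropped, e.g. an empty prompt
    eps_cond = predict_noise(x_t, t, cond)
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Hypothetical toy network: the prompt simply shifts every noise estimate by +1.
def toy_predict_noise(x_t, t, cond):
    shift = 0.0 if cond is None else 1.0
    return [0.1 * x + shift for x in x_t]


guided = guided_noise(toy_predict_noise, [0.0, 1.0], t=10, cond="a red fox", guidance_scale=3.0)
```

Some papers write the same rule as (1 + w)·eps_cond − w·eps_uncond, which is equivalent with `guidance_scale = 1 + w`. The guided estimate then replaces the plain noise prediction inside each denoising step, at the cost of two network evaluations per step.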

Scaling has also been favorable. Diffusion models have tended to improve steadily with more parameters, more data, and longer training, and in practice they have proven easier to scale than GANs. Their sample quality has made them the backbone of systems such as Stable Diffusion and DALL-E 3. Beyond images, extensions to audio, video, and even molecular design are under active development.

Efficiency remains the main challenge. Generating a single image typically requires dozens to hundreds of denoising steps (the original DDPM sampler used 1,000), each a full pass through the network, so inference is much slower than with a GAN, which produces an image in a single forward pass. Researchers are addressing this with acceleration methods such as denoising diffusion implicit models (DDIM) and consistency models, which cut the number of steps substantially with only a modest loss in quality.
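DDIM (Song et al., arXiv:2010.02502) reuses a network trained with the standard DDPM objective but samples along a shorter path, and with its noise parameter eta set to 0 each step is deterministic. The sketch below assumes the same linear schedule as DDPM and visits only 50 of the 1,000 training timesteps; `ddim_step`, `ddim_sample`, and the `predict_noise(x_t, t)` interface are illustrative names, not a library API. Consistency models work differently and are not sketched here.

```python
import math

# Assumed schedule: the DDPM linear schedule, beta from 1e-4 to 0.02 over T = 1000 steps.
T = 1000
alpha_bars = []
running = 1.0
for t in range(T):
    running *= 1.0 - (1e-4 + (0.02 - 1e-4) * t / (T - 1))
    alpha_bars.append(running)


def ddim_step(x_t, eps, a_bar_t, a_bar_prev):
    """Deterministic DDIM update (eta = 0): estimate the clean sample from the
    predicted noise, then re-noise that estimate to the earlier noise level."""
    x0_pred = [(x - math.sqrt(1.0 - a_bar_t) * e) / math.sqrt(a_bar_t)
               for x, e in zip(x_t, eps)]
    return [math.sqrt(a_bar_prev) * x0 + math.sqrt(1.0 - a_bar_prev) * e
            for x0, e in zip(x0_pred, eps)]


def ddim_sample(x_start, predict_noise, num_steps):
    """Visit about num_steps evenly spaced timesteps (assumes 1 <= num_steps <= T)
    instead of all T, from the noisiest to the cleanest."""
    timesteps = list(range(0, T, T // num_steps))[::-1]
    x = x_start
    for i, t in enumerate(timesteps):
        # Noise level to step down to; 1.0 means "fully clean" after the last step.
        a_bar_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        x = ddim_step(x, predict_noise(x, t), alpha_bars[t], a_bar_prev)
    return x
```

With `num_steps=50`, sampling costs 50 network calls instead of 1,000. If the noise predictor were perfect, each step's clean-sample estimate would already be exact, which is why skipping intermediate timesteps costs relatively little when the network is accurate.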

Diffusion models represent more than a trend in generative AI. They embody a shift toward probabilistic, physics-inspired approaches that trade adversarial competition for iterative refinement. As acceleration techniques mature, they are likely to become the foundation of generative modeling across domains.

References

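Ho, J., Jain, A., and Abbeel, P. (2020). Denoising Diffusion Probabilistic Models. https://arxiv.org/abs/2006.11239

Song, J., Meng, C., and Ermon, S. (2020). Denoising Diffusion Implicit Models. https://arxiv.org/abs/2010.02502

Stability AI (2022). Stable Diffusion Public Release. https://stability.ai/blog/stable-diffusion-public-release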